FRC Day 22 Build Blog

Day 22: Finishing Up The Field

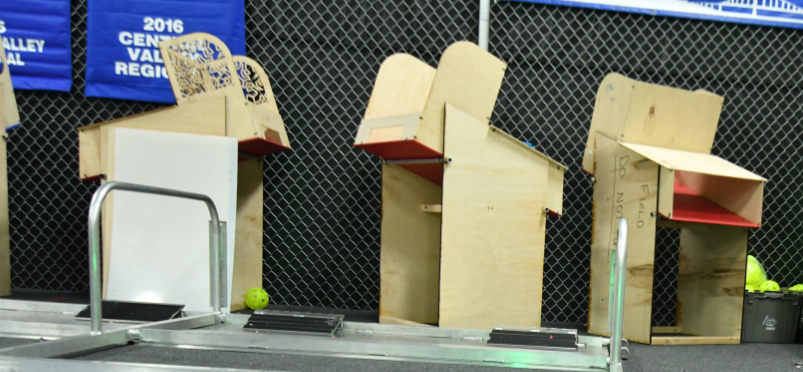

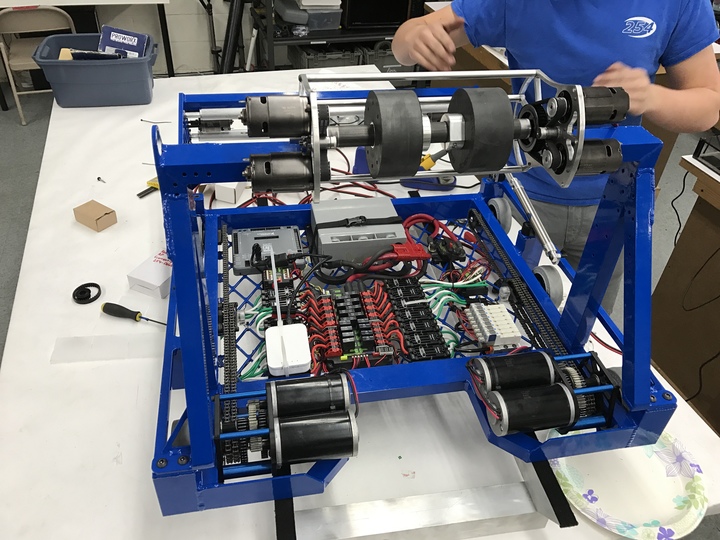

Robot Progress

Today was a productive manufacturing and assembly day even though we didn't have our anodized parts back. We took off the superstructure today to make holes to run wiring from the shooter and feeder motors and encoders from the top of the superstructure to the drivebase. We began fishing wires, but were not able to finish because we have to add more holes to the superstructure to mount the feeder roller plates. Tomorrow we should have several parts back from anodizing and we can begin to assemble several subsystems including the intake, superstructure, shooter, and feeder. Manufacturing will also continue on the hopper polycarb pieces.

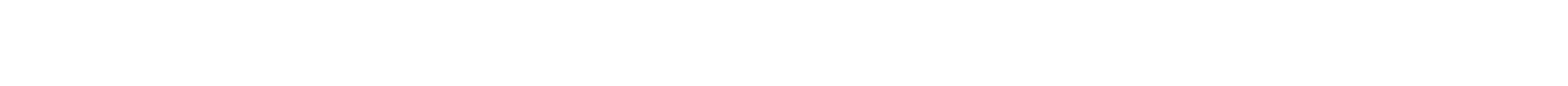

Field Construction

Today we finished up all 6 of the feeder stations for the field. We also changed all the plastic from the previously used HDPE to the new official colored plastic, which allows gears and balls to slide down it much more easily. We plan to continue with field work, with the next tasks being to finish the more complex airships and assemble the field side walls/driver stations.

Programming

We made leaps and bounds towards cleaning up our Github codebase and making work far easier Previously, our codebase had sprawled across multiple branches, each with their own versions of essential robot code, which made collaboration difficult. We merged the latest autonomous and camera code into the master branch. In the future, we will ensure that all code remains within three commits of the Master branch, and we will pull far more frequently.

We also began integrating the code for the Nexus phone camera system, as well as the robot state machine, into our codebase. The Nexus vision system was used with great success last year but is far more complicated than our PixyCam system. We will test the PixyCam again, using the central point on the top segment instead of the centroid (a far more consistent, reliable means of estimating the distance), and the accuracy/reliability will determine whether we use the PixyCam or the Nexus phone.

The drivebase PID control was tuned for our unweighted robot drivebase to compensate for mechanical differences. Previously, we set a constant voltage to the motor to control the robot’s speed, called open-loop control. This does not take into account different mechanical differences, like gearbox lubrication or bits of carpet stuck in gearboxes (a problem we saw). Now, instead of swerving, the drivebase drives in a straight line!

Finally, we began work on integrating an infrared laser distance sensor into our robot code. The sensor turns on a high-power infrared laser beam and measures the time taken for the beam to reflect back to a detector, then uses that information to calculate the distance between the sensor and the object. This “time of flight” sensor has great accuracy, and we envision using it in conjunction with our camera system to accurately measure distances and aim appropriately.